Cogito ergo sum

“Cogito ergo sum” is a Latin phrase commonly translated as “I think, therefore I am”, often taken to express the basic requirement for self-awareness. The phrase first appeared in French as “je pense, donc je suis”, published by French philosopher René Descartes in 1637 in his Discourse on the Method, and was later rendered in Latin as “Cogito ergo sum” in his subsequent writings.

The concept of self-awareness was not seriously applied to anything non-organic until 1863, when Samuel Butler’s article Darwin among the Machines, a forerunner of modern science fiction, pondered a future of self-replicating, sentient machines with the potential to supplant humans as the dominant species on Earth.

Since then, humanity has developed an obsession with thinking machines, pouring all of its fears, hopes, ambitions, and paranoias into this massive black box.

As a species with equal parts self-awareness and self-preservation instinct, we can find the thought of a new species walking the Earth, one that could possibly replace us, unsettling on several grounds.

Introducing a new “player” into the food chain has a societal component: a new species, be it artificial or extraterrestrial, brings cultural challenges and conflicts from philosophical, ethical, and even religious standpoints. Sentience allows for the ability to alter one’s own perception and interpret objective reality on subjective grounds, which, further down the rabbit hole, may very well lead to AI-generated religions and philosophies.

Another aspect of introducing a new self-aware artificial organism into our ecosystem is its effect on our economic equilibrium, which is already precarious and delicate on its own. A society capable of advancing technology faster than we can, and predictably beyond human capabilities, would introduce economic uncertainty that puts our species at an obvious disadvantage.

Nothingburger

With that said, what is happening, right now, in 2024, that makes us so fearful of AI? The short answer is: a whole lot… of nothing.

As is often true of commercially available technology with disruptive capabilities, perception plays a key role in determining the success of its marketing. This is why all major AI players are very careful not to be overly specific about what they are actually selling.

By this token, and just because I love throwing a good monkey wrench, here is what AI is, in the words of Meta’s very own AI, when asked for the definition of artificial intelligence:

“Artificial Intelligence (AI) refers to the ability of machines or computer programs to perform tasks that typically require human intelligence, such as learning, problem-solving, decision-making, and perception. AI systems use algorithms and data to make predictions, classify objects, understand natural language, and other cognitive tasks.

There are various types of AI, including:

- Narrow or Weak AI, which focuses on a specific task or domain.

- General or Strong AI, which aims to match human intelligence in multiple areas.

- Superintelligence, which surpasses human intelligence.

- Artificial General Intelligence (AGI), which can perform any intellectual task that a human can.”

I then asked Meta AI which type of artificial intelligence it is, and this was the response:

“I’m a narrow or weak AI, designed to excel in specific tasks like answering questions, generating text, and providing information. My abilities are tailored to assist with a wide range of inquiries, but I am not a general or strong AI. I’m not capable of self-awareness, creativity, or surpassing human intelligence like a strong AI would. My primary goal is to provide helpful and accurate information within my knowledge domain.”

Skynet is still a potato clock

At this point, some may ask whether other types of AI are actually available today, and how soon we need to start worrying about them.

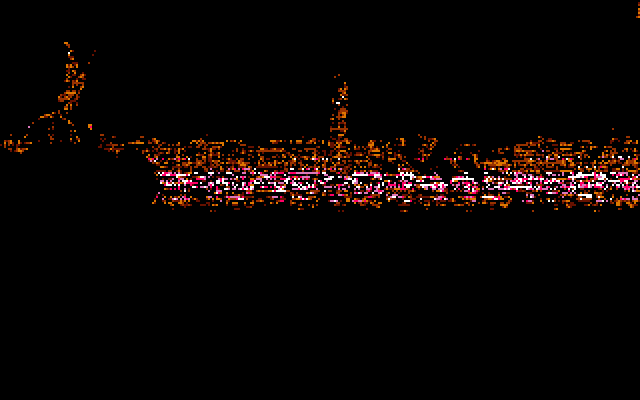

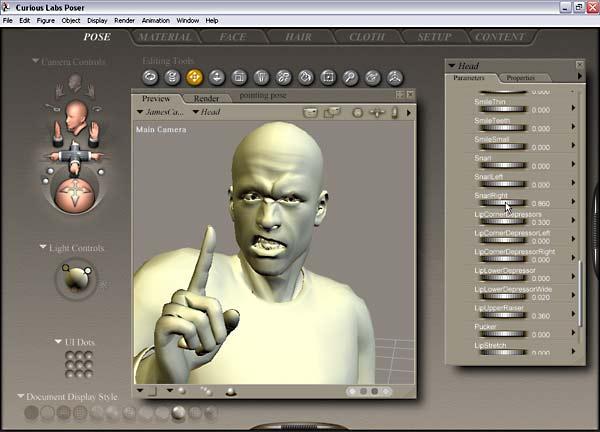

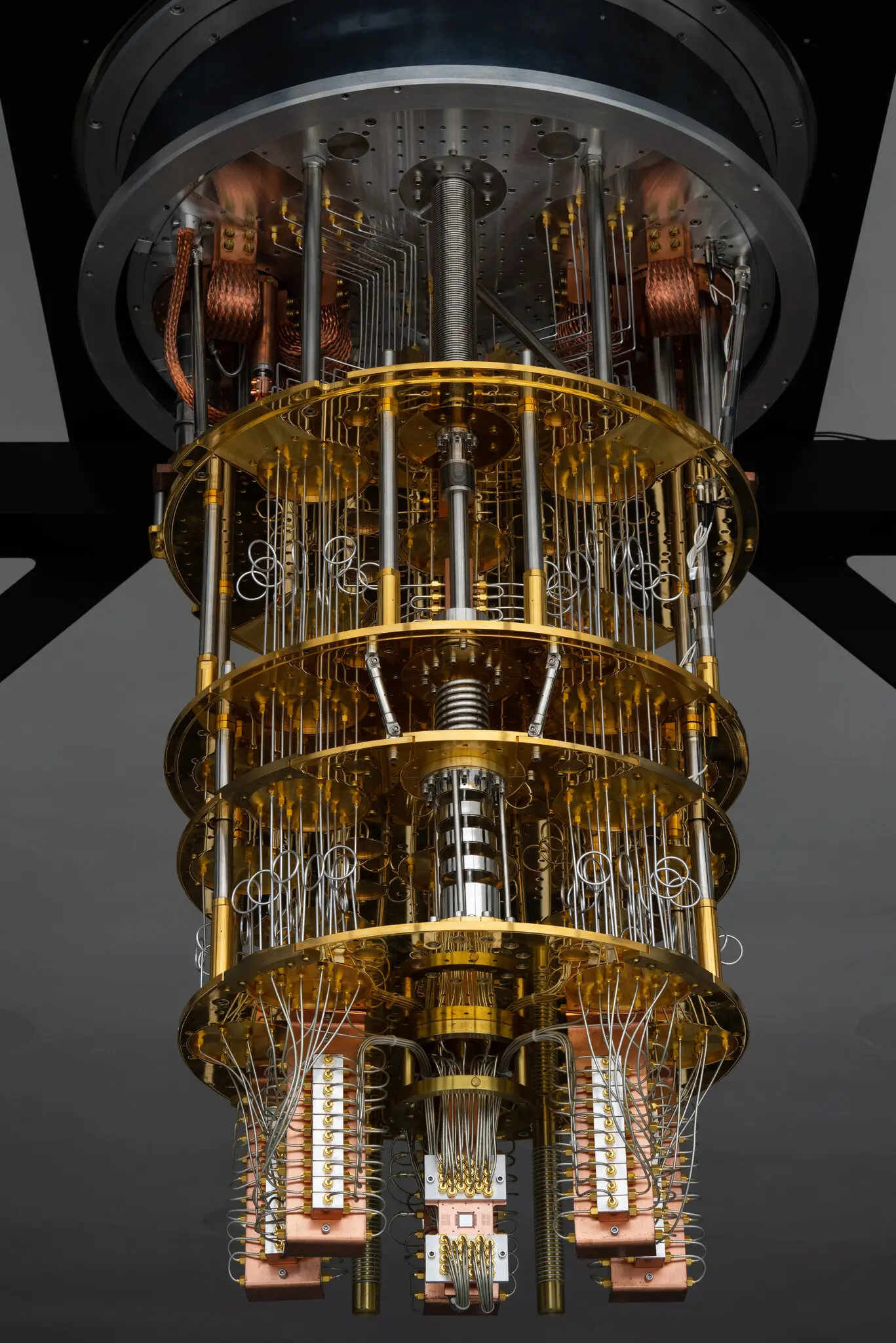

What you see in these pictures is IBM’s attempt at “quantum computing”, which relies on specialized hardware that processes data in a very different way from a regular computer.

Currently, all available “AI” chips and “AI” systems are based on binary computing, which means that every computer system available to consumers today, and for the foreseeable future, is incapable of anything more than Narrow or Weak AI. In other words: they are not AI. Not even close.

The device above is not a “binary” computer. Instead of regular “bits”, it processes information using “qubits”. While a classical bit is always either a one or a zero, a qubit follows the principles of quantum physics and can exist in a superposition of both states at the same time.
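To make the bit versus qubit distinction a little more concrete, here is a toy sketch in plain Python, assuming the textbook model of a single qubit as a pair of complex amplitudes. It is not how IBM’s hardware is actually programmed, and the helper names (`hadamard`, `probabilities`, `measure`) are hypothetical. It shows a qubit placed in an equal superposition, and how each measurement still collapses it to a single ordinary bit.

```python
import math
import random

# Toy model (assumption): a single qubit is a pair of complex amplitudes
# (alpha, beta) for the states |0> and |1>, with |alpha|^2 + |beta|^2 == 1.

def hadamard(state):
    """Apply a Hadamard gate; it turns |0> into an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Return the probabilities of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

def measure(state, rng=random):
    """Simulate one measurement: the superposition collapses to a single classical bit."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

# Start in |0> (exactly like a classical bit set to 0), then apply the gate.
qubit = hadamard((1 + 0j, 0 + 0j))
print(probabilities(qubit))  # both values close to 0.5

# Repeated measurements return 0 or 1 at random, roughly half the time each.
rng = random.Random(42)
counts = [0, 0]
for _ in range(1000):
    counts[measure(qubit, rng)] += 1
print(counts)
```

The “both states simultaneously” idea lives in the two amplitudes; any single readout still yields just one bit, which is part of why useful quantum algorithms are hard to design.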

In theory, a quantum computer with sufficient qubit capacity (likely in the trillions, if we were to draw a comparison with the human brain) may very well be capable of human thought. Yet, before you start locking your doors and stocking up on ammo and canned food while waiting for Skynet to go live, here are a few considerations:

Building a device with such capabilities would require an astronomical investment, as well as vast facilities to operate it, likely the size of a small nation.

In December 2023, IBM unveiled a processor with 1,121 superconducting qubits, one of the largest quantum processors built to date, with the cost of future systems of similar capabilities projected to range between ten and forty million dollars.

These systems, as advanced and groundbreaking as they are, are merely proofs of concept for systems that our great-grandchildren may not even witness in their lifetimes.

Currently, the most advanced applications of these systems fall within supercomputing for extremely focused fields, such as energy, astrophysics, seismology, and chemistry research, none of which is remotely close to the glorified chatbots and generative doodlers we get to play with today, and certainly nowhere near the type of AGI some of us fear.

But… but… AI is “taking” my job!

There seems to be a general lack of pragmatism in the conversation about the influence of Narrow AI in the workplace. This reaction is understandable, and often warranted: using Narrow AI tools to generate content has raised concerns about what is essentially the unauthorized use of visual and vocal likenesses, artwork, and data to create an illusion of original content.

Narrow AI is simply a processor of the training data that feeds it. It’s a language interpreter, a word-salad generator, an image and video processor designed to follow instructions, just like any other software tool, only faster and with a more advanced understanding of the data provided. The issue is how consumer-focused industries have chosen to profit from Narrow AI, and how overwhelmingly successful they are likely to be moving forward.

If we rewind the clock less than a decade, similar complaints were leveled at companies like Amazon for scaling online shopping to the point of a systemic shift, in which small brick-and-mortar retail progressively shrank and moved almost entirely online.

Then, following a global pandemic, those concerns dissipated as online shopping became a basic utility, alongside a fast (and not very well thought-through) transition to remote work.

Finally, as the world returns to a sort of normalcy, we face a period of recovery from critical failures that resulted from hasty and poorly researched decisions, with effects reverberating through all layers of society: massive workforce restructuring, layoffs in shocking numbers, homelessness, and economic uncertainty.

This reminds me of one particular scene in the 2010s TV show Silicon Valley, where the character of Jared Dunn, brought to life by actor Zach Woods, discusses disruptive technologies during the industrial revolution. Dunn points out how growing amounts of horse manure covered the streets of London as more and more workers moved to the cities toward the turn of the century. What Londoners did not foresee was the advent of a new technology set to obliterate that problem: the automobile.

Today’s focus on AI appears to be deeply rooted in the same type of fear Londoners faced before the 1900s: we fail to consider the true limitations of a technology that can only go so far as to provide an illusion of intelligence, while a real breakthrough, good or bad, may yet make its surprising reveal.